Google Drive connector as a destination

The following ETL pipeline transfers customer data from a Langstack entity to a CSV file on Google Drive.

For step-by-step instructions on creating an ETL pipeline, click here.

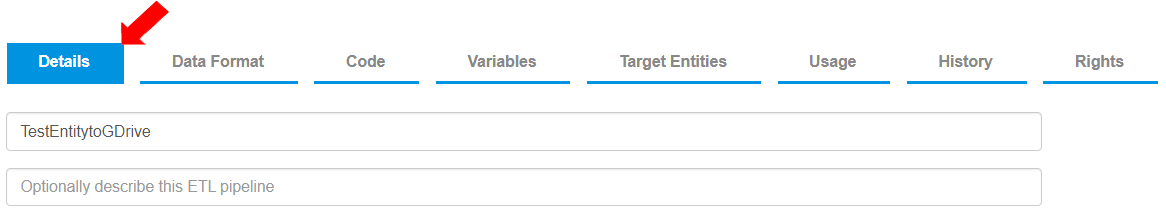

An ETL pipeline named “TestEntitytoGDrive” is created.

To connect to the data source, the necessary information is added in the Data source section:

The “Entity” tab is selected.

The “Test_Customers_Entity” entity is selected from the drop-down menu.

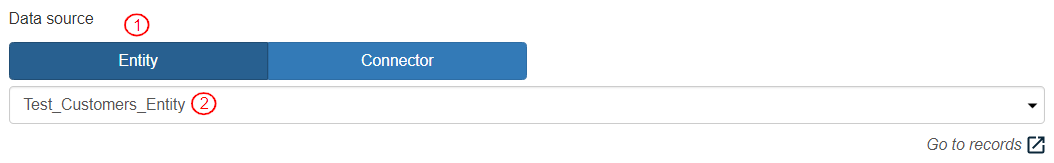

To connect to the data destination, the necessary information is added in the Data destination section:

The “Connector” tab is selected.

For this example, “GDrive123” is selected from the drop-down menu. An existing connector can be selected from the drop-down menu, or a new connector can be created by clicking the [+] button.

To go to the settings, click the “Edit the settings” arrow.

To prevent multiple simultaneous runs of the ETL pipeline, the “skip execution while in progress” toggle is left enabled. When this toggle is enabled, a new execution of the ETL pipeline is skipped if one is already in progress.
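The skip behavior can be pictured with a small Python sketch. This is only an illustration of the idea, not how Langstack implements it: the `PipelineRunner` class, its `trigger` method, and the return values `"skipped"` and `"completed"` are hypothetical names introduced here.

```python
import threading


class PipelineRunner:
    """Hypothetical runner illustrating "skip execution while in progress".

    This is not Langstack code; it only models the described behavior.
    """

    def __init__(self, job):
        self._job = job                  # callable that performs one pipeline run
        self._lock = threading.Lock()    # held for the duration of a run

    def trigger(self):
        # Non-blocking acquire: if a run already holds the lock,
        # this trigger is skipped instead of waiting or running in parallel.
        if not self._lock.acquire(blocking=False):
            return "skipped"
        try:
            self._job()
            return "completed"
        finally:
            self._lock.release()
```

With the toggle disabled, the equivalent sketch would simply call the job without checking the lock, so overlapping runs would be possible.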

The default selection for ETL pipeline execution is “Immediate”.

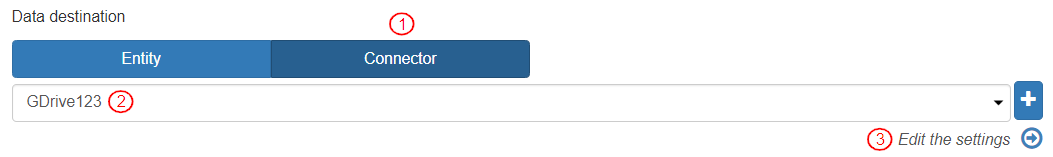

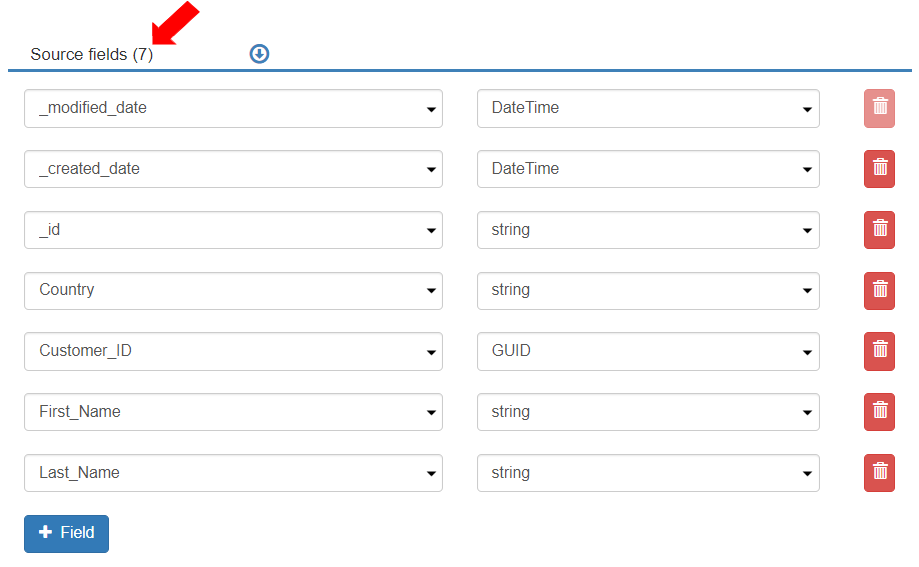

To align the source fields with destination fields, the settings for the reader and writer format are defined in the “Data Format” tab. The “Reader” tab is selected by default. For this example, the fields are added as per the image below.

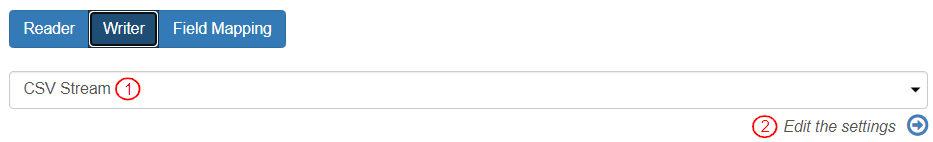

To update the settings for how the data should be written, select the “Writer” tab.

The writer stream is “CSV Stream.”

To add the table name, click on the “Edit the settings” arrow.

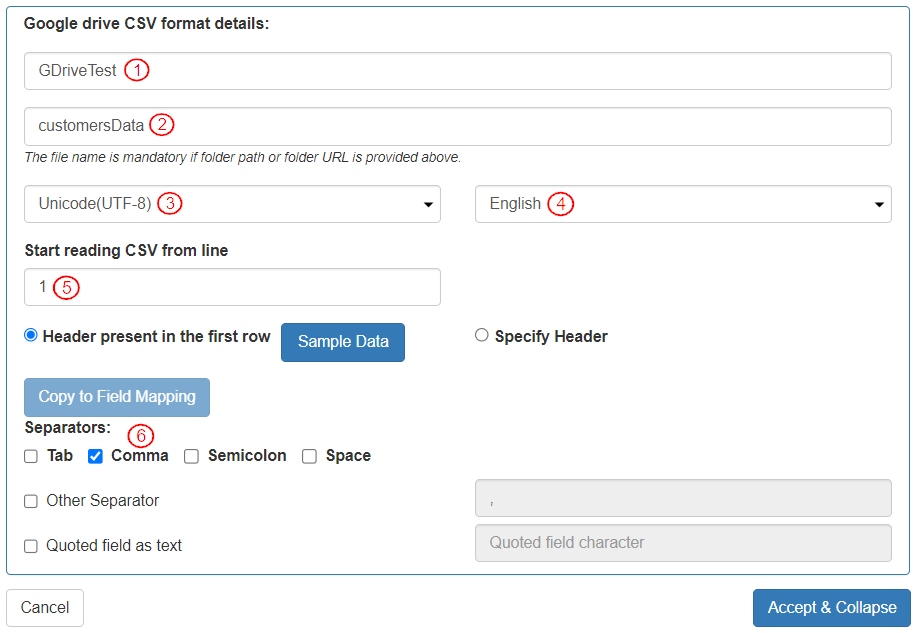

The details needed to write the records are defined in the “Google drive CSV format details:” section as follows:

In the field “File path or File URL or Folder path or Folder URL”, the Google Drive folder name “GDriveTest” is entered.

The “File name” is entered as “customersData”.

The “CharacterSet” is selected as “Unicode(UTF-8)”.

The “Language” is selected as “English”.

The “Start reading CSV from line” is defined as “1”.

The “separator” is selected as “Comma”.

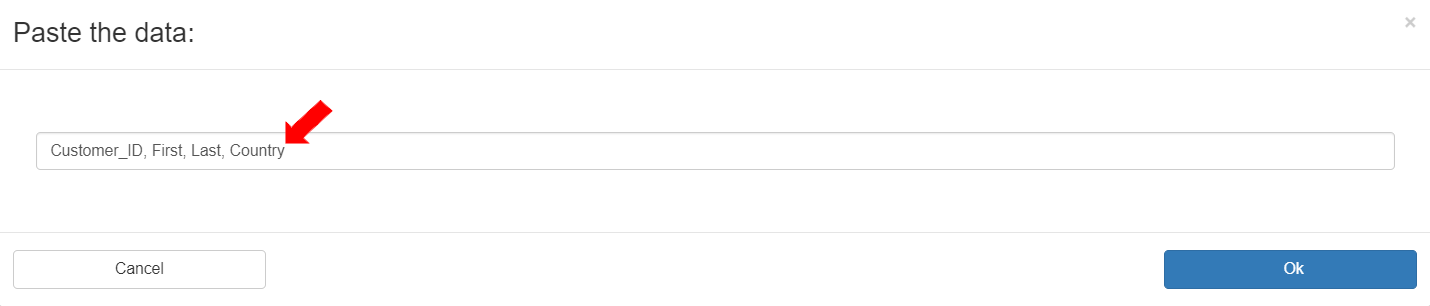

In the “Sample data”, the column names of the entity are pasted: “Customer_ID,First,Last,Country”.

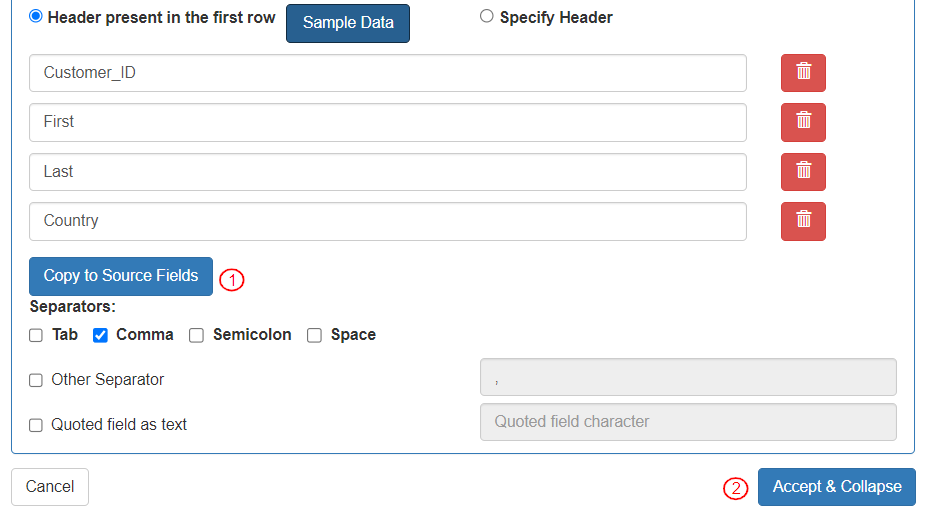
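As a rough illustration of how a comma-separated sample line yields individual fields, the Python sketch below splits the sample data from this example using the standard `csv` module. The function name `header_fields` is hypothetical, and the sketch makes no claim about how Langstack parses the sample internally.

```python
import csv
import io

# Sample data pasted in this example: the column names of the entity.
SAMPLE_DATA = "Customer_ID,First,Last,Country"


def header_fields(sample: str, separator: str = ",") -> list[str]:
    """Split the first line of the sample data into field names."""
    reader = csv.reader(io.StringIO(sample), delimiter=separator)
    return next(reader)


print(header_fields(SAMPLE_DATA))
# ['Customer_ID', 'First', 'Last', 'Country']
```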

Once the sample data is added, the writer fields are displayed.

The column names are copied to Field Mapping by clicking “Copy to Field Mapping”.

Click “Accept & Collapse” to save the information.

The “Writer” mode is “Append”.

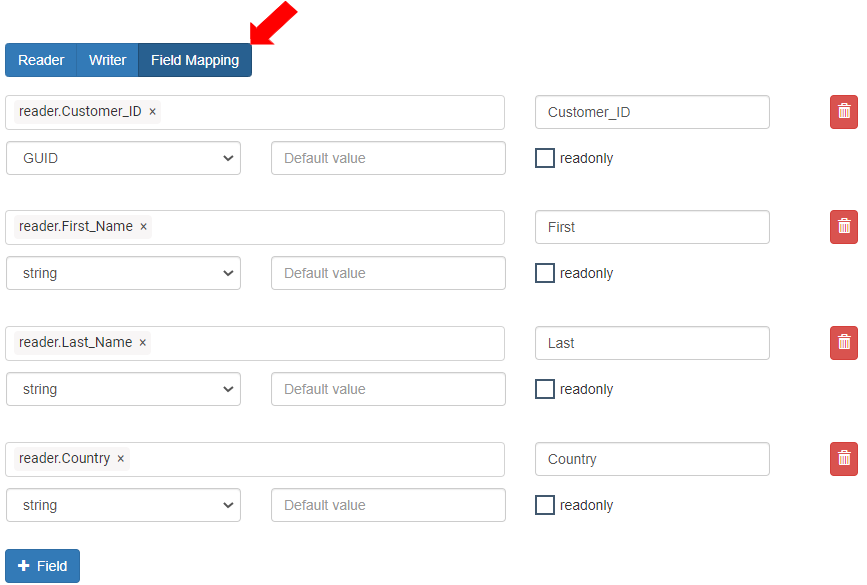
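To make the effect of “Append” mode concrete, here is a minimal Python sketch that appends records to a local CSV file using the settings from this example (UTF-8, comma separator, the four column names). A local file stands in for the file on Google Drive, and the function name `append_records` is hypothetical. Writing the header row only when the file is new or empty is an assumption of this sketch; the source does not state how Langstack handles the header.

```python
import csv
import os

# Column names taken from the sample data in this example.
FIELDS = ["Customer_ID", "First", "Last", "Country"]


def append_records(path, records, fieldnames=FIELDS):
    """Append dict records to a CSV file, keeping any existing rows."""
    # Assumption: the header is written only if the file is missing or empty.
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    # Mode "a" adds rows at the end of the file instead of overwriting it.
    with open(path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames, delimiter=",")
        if is_new:
            writer.writeheader()
        writer.writerows(records)
```

Calling `append_records` twice on the same path leaves the rows from the first call in place and adds the new rows after them.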

In the Field Mapping section, all the “Mapped Fields” are aligned.
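Conceptually, an aligned field mapping pairs each source field with one destination column. The Python sketch below assumes, for illustration only, that the entity fields carry the same names as the CSV columns in this example; `FIELD_MAPPING` and `map_record` are hypothetical names, not part of Langstack.

```python
# Assumed one-to-one mapping: source entity field -> destination CSV column.
FIELD_MAPPING = {
    "Customer_ID": "Customer_ID",
    "First": "First",
    "Last": "Last",
    "Country": "Country",
}


def map_record(source_record: dict, mapping: dict = FIELD_MAPPING) -> dict:
    """Return a destination record containing only the mapped fields."""
    missing = [src for src in mapping if src not in source_record]
    if missing:
        # An unaligned mapping shows up here as a missing source field.
        raise KeyError(f"source record lacks mapped fields: {missing}")
    return {dest: source_record[src] for src, dest in mapping.items()}
```

Fields present in the source record but absent from the mapping are left out of the destination record.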

When the ETL pipeline is executed (after Save and Publish), the records will be added to the destination.
