Exercise 2: ETL pipeline, CSV source & FTP Connector

In this exercise, store data in an Entity.

Requirements

Store data through the ETL pipeline.

Source data is in a CSV file.

Use an FTP tool as a connector. File must be residing on the FTP server before the ETL pipeline process starts.

Store the data from the CSV file in the entity “UserName_Acc_customers”.

Solution

For this exercise, place the source file on the SFTP connector. Then define Reader, Writer, and Field Mapping settings in the ETL pipeline. Finally, run the ETL pipeline to store the details in the destination Entity.

The image below illustrates a data source being an FTP connector for a CSV file, and the Destination is an Entity.

For this exercise, use a CSV file with the following fields containing customer information.

Address

City

Country

Customer_ID

Email

First_Name

IsActive

Join_Date

Last_Name

Phone

Zip_Code

The data used for this exercise is not real and is test data that has been auto-generated by http://www.convertcsv.com/generate-test-data.htm. The format of the dates generated in the test data is inconsistent and needs to be fixed to be aligned with the target entity's date format, i.e., YYYY-MM-DD.

Open Filezilla and connect.

Place the CSV file in the remote connecting site.

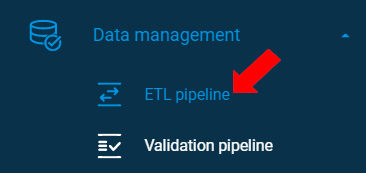

To go to the ETL pipeline template, click on “ETL pipeline” under the “Data management” menu on the left side panel.

To create a new ETL pipeline, click the [+ ETL pipeline] button under the “ETL pipeline” tab.

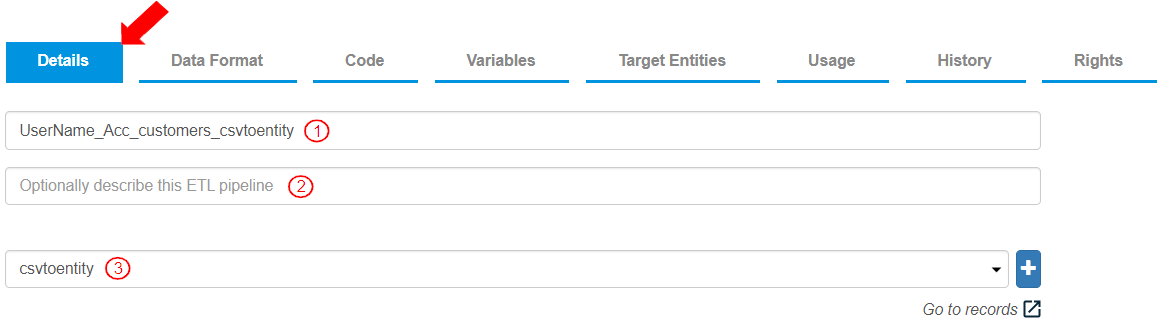

To label the ETL pipeline and execution log entity, add the following information:

Enter the “ETL pipeline” name as “UserName_Acc_customers_csvtoentity”.

Optionally add a description for this ETL pipeline.

Enter a name for the execution log for this ETL pipeline as “csvtoentity”. This log will be updated each time the ETL pipeline is executed and can be viewed by clicking “Go to records”.

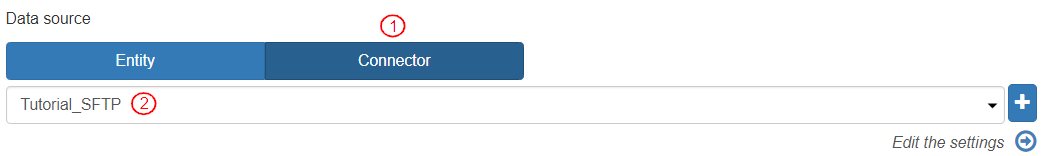

To connect to the data source, add the necessary information in the “Data Source” function. To add an existing connector:

Select the “Connector” tab.

Select the relevant connector source. In this exercise, “Tutorial_SFTP” is selected. (The source can be selected from the drop-down menu or a new source can be added by clicking the [+] button and adding relevant details.)

To edit the settings, click the “Edit the settings” arrow. In the settings, to add or update the details for the FTP/SFTP Connector, update the required fields (click here for the steps). If a connector does not exist, to create a new connector In the Data Source in the ETL pipeline, click the [+] button and add the required fields (click here for the steps to create a connector).

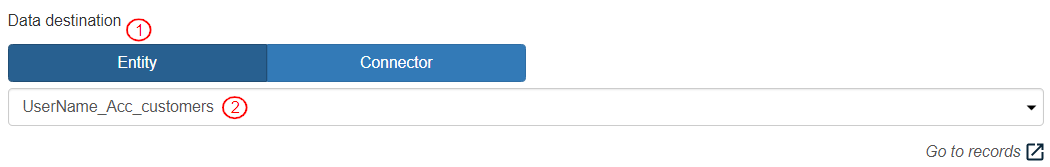

To add the necessary details to connect with the data destination, in the “Data Destination” section:

Select the “Entity” tab.

Select Source as “UserName_Acc_customers”.

To disallow multiple requests simultaneously, leave the toggle button enabled for “skip execution while in progress”. Enabling this toggle button defines that the execution of this ETL pipeline will be skipped when there is one already in progress.

The default value for ETL pipeline execution is selected as “Immediate”. For this exercise, keep it as is.

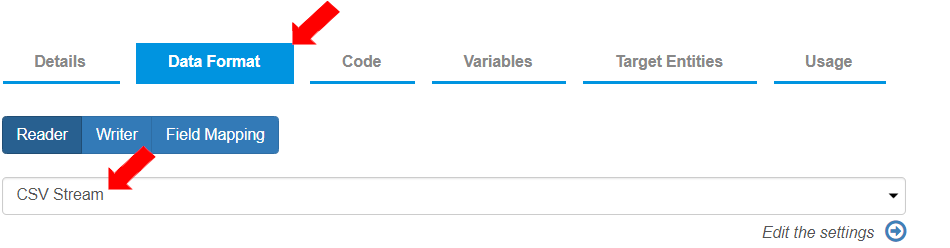

To define the Reader (reading from the source) and Writer (writing on the destination) settings, go to the “Data Format” tab. To define the stream, select “Reader Stream” as “CSV Stream”.

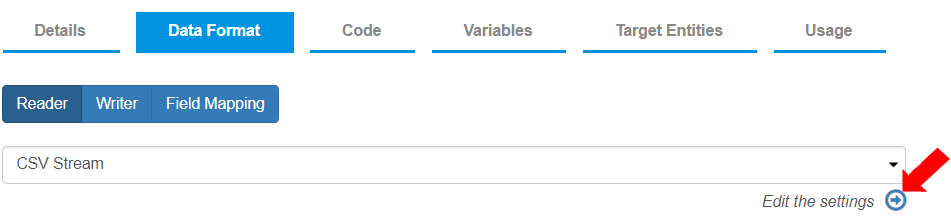

To edit the settings for CSV stream, click on the arrow besides "Edit the settings".

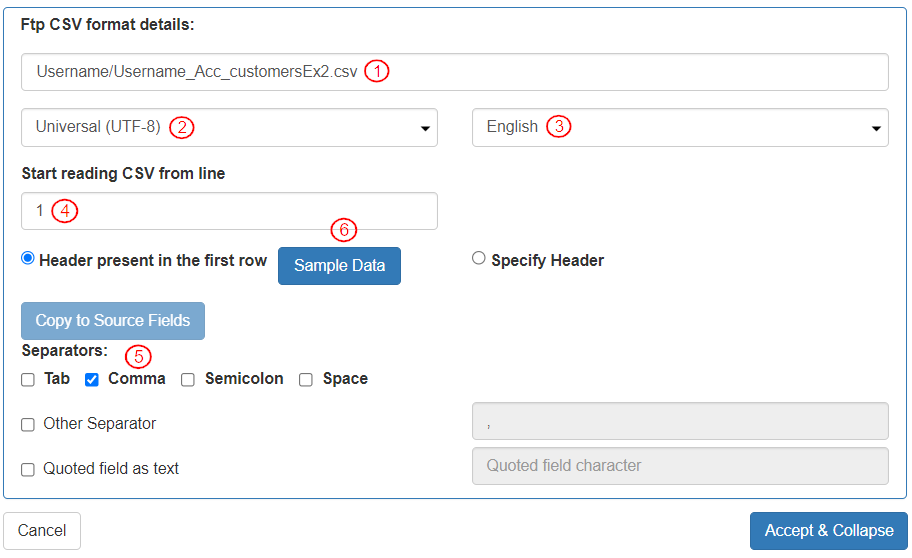

To specify the “Reader stream”, define the following settings in the “FTP CSV Format Details” box:

Add the exact file path. This is the path to the file from which the CSV data will be read.

Select “Character Set” as “Universal (UTF-08)”.

Select “Language” as “English”.

To define that the data is read from the first row, in the “Start reading CSV from line” field, enter the digit “1”. The field “Header present in the first row” is selected by default.

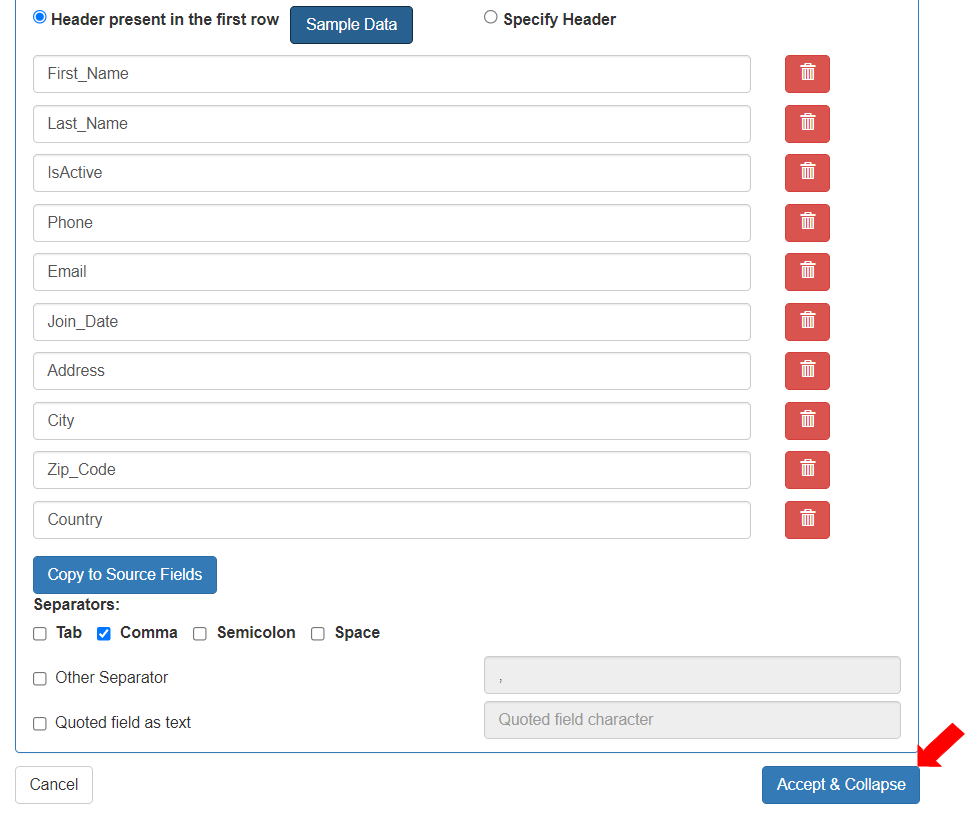

To define the separator based on which the fields are distinguished, select Separator as “Comma”. The [Sample Data] button gets activated.

To define the sample entity fields, click the [Sample Data] button.

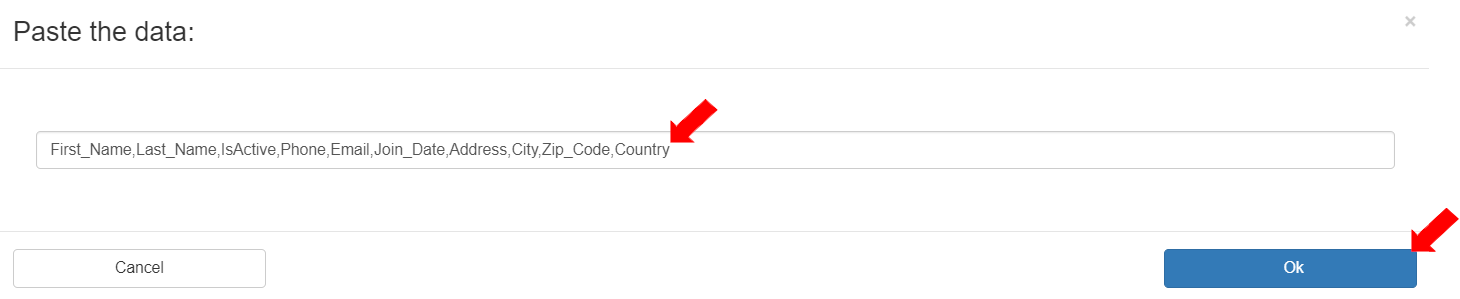

When the [Sample Data]button is clicked, a box will be displayed to add the header file sample that is in the CSV file. To define the fields present in the target entity, add the header file sample as follows:

First_Name,Last_Name,IsActive,Phone,Email,Join_Date,Address,City,Zip_Code,CountryThe header sample will contain the details of the fields to be read from the target Entity. To save the details, click the [OK] button.

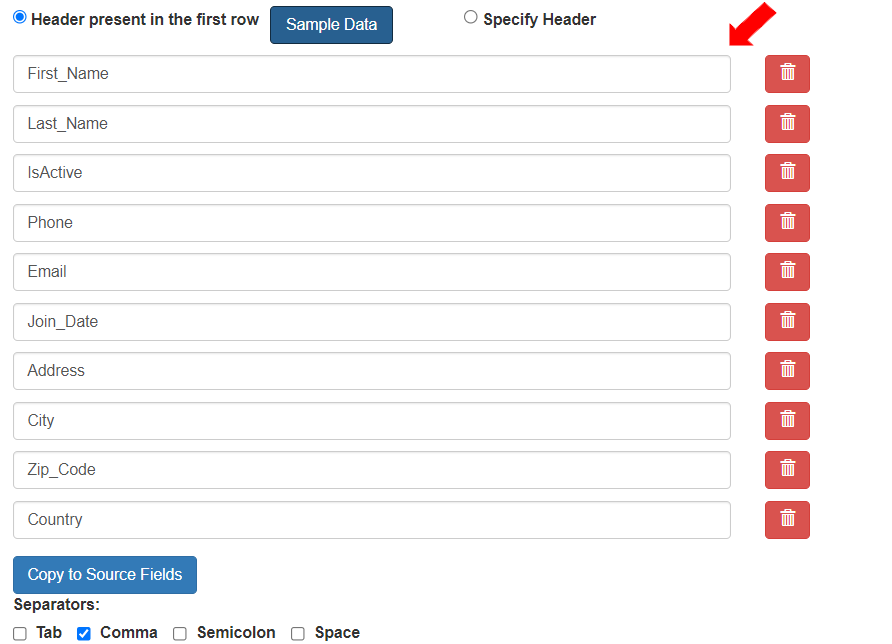

The entity's fields will be displayed (based on the sample data entered).

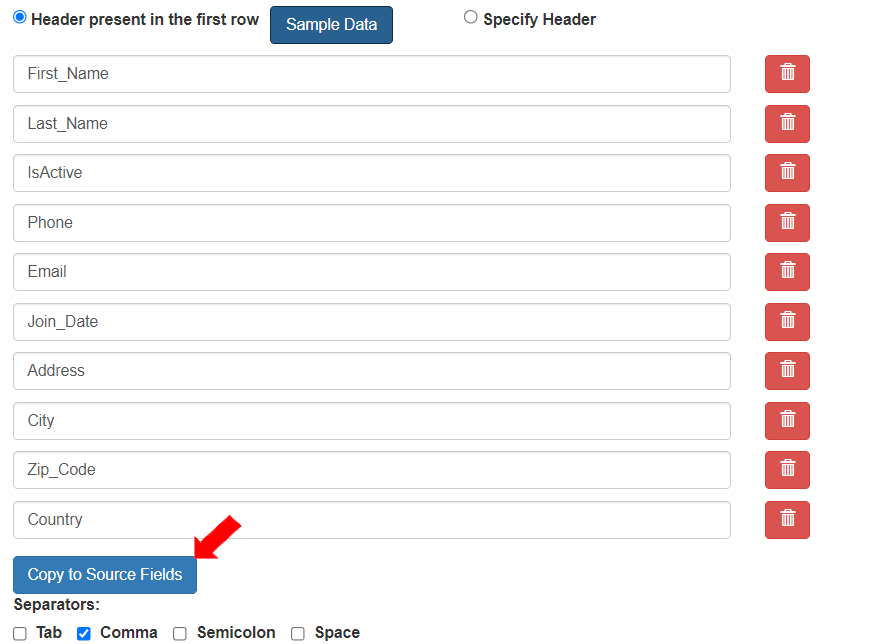

To copy the CSV fields to Source fields in the entity, click the activated [Copy Sample to Source Fields] button. Fields in the source entity will be populated.

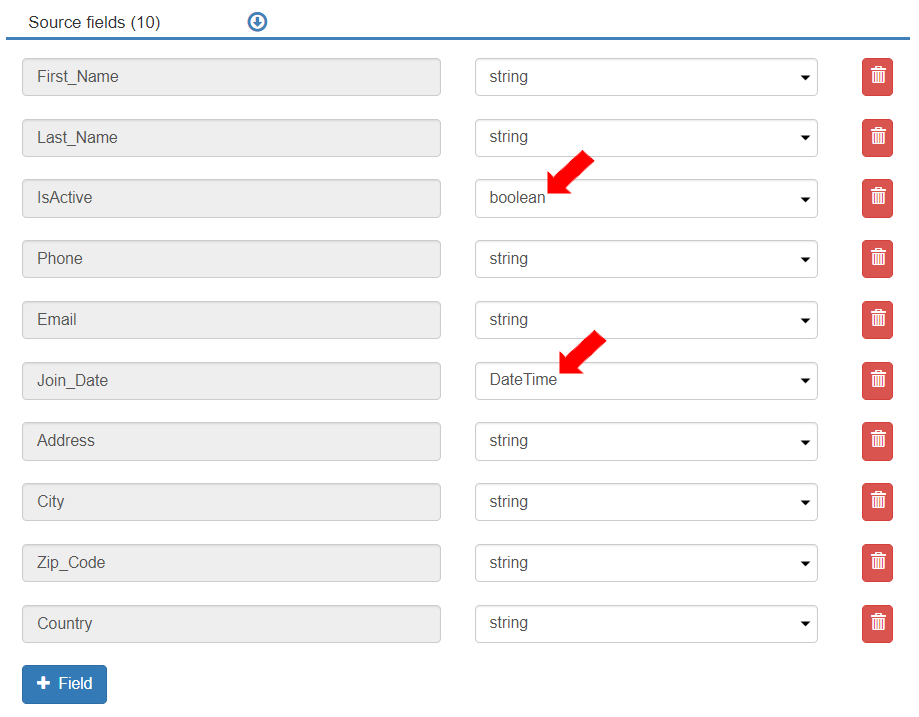

The fields will be populated with string data types in the Source fields section. Adjust the data types of the fields “IsActive” and “Join_Date” to align with data types of the target entity. (The field's data type can be selected, unnecessary fields can be deleted and further fields can be added as required.)

To accept the Ftp CSV format details settings, click the [Accept & Collapse] button.

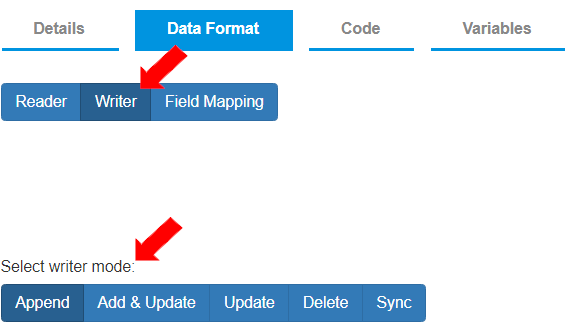

After the “Reader” settings are defined, to define “Writer” settings, select the Data Format>Writer tab. To define that the data is written to the destination as mentioned in reader source fields without transforming it, select “Writer Mode” as “Append”.

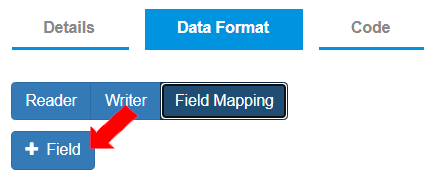

To map the reader source fields to the entity destination fields, select the “Field Mapping” tab. To add the Mapped Fields, click the [+ Field] button and add relevant information.

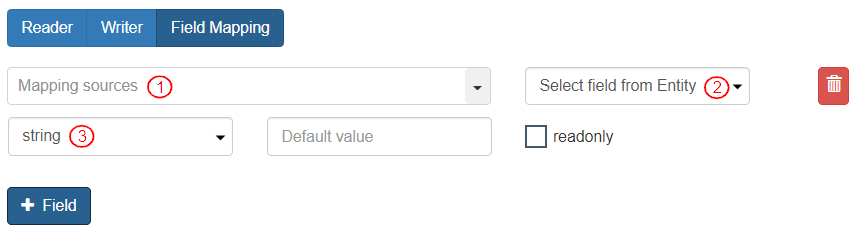

In case of CSV files, the sequence of fields top-down must be exactly the same as the sequence specified in the Reader.

Mapping Sources: Select the field specified in the Reader. Select Variables>Reader>(field name). It displays as “reader.(Field Name)”.

Select field from Entity: Select mapping field from the Entity.

Data Type: Select the data type for the mapping fields.

Add all required fields in the “Field Mapping” section except Customer_ID (as that is auto-generated):

First_Name

Last_Name

IsActive

Phone

Email

Join_Date

Address

City

Zip_Code

Country

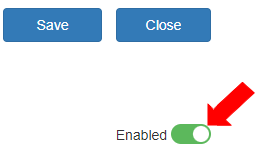

To save the ETL pipeline, click [Save].

To publish the ETL pipeline, click [Publish].

Ensure the ETL pipeline is enabled.

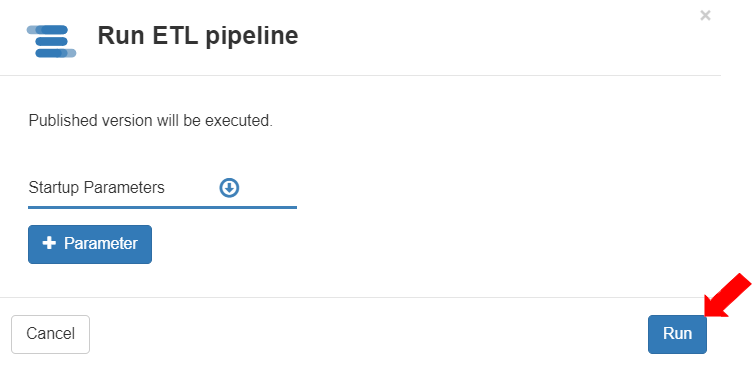

Click the [Run] button.

The Run ETL pipeline dialog box displays. Click [Run] to execute the ETL pipeline.

The ETL pipeline will run on the date and time specified.

The records will be added to the destination.

Last updated